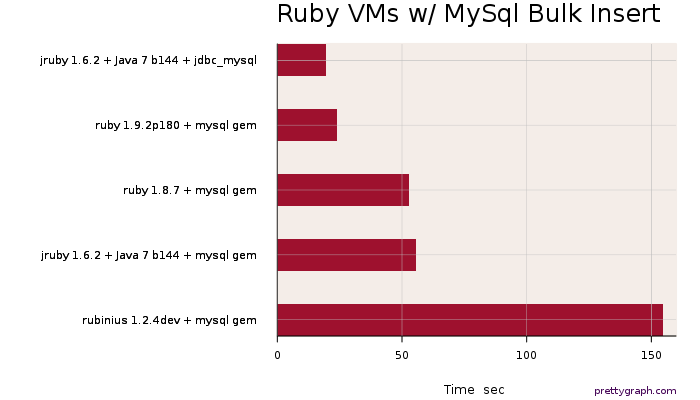

MySQL Bulk Insert Performance With JRuby and JDBC

I often have need to perform a bulk import of CSV files into MySQL for different projects. Recently, I made the move to the JRuby platform for one of my projects and have benefited from some great performance improvements for the types of work the code needs to perform. I decided that it was time now to examine the differences between Ruby versions when it comes to inserting bulk data into MySQL.

The Approach

With rvm once again ready to do my bidding, I proceeded to install the following gems into each of the versions of Ruby installed:

- fastercsv (1.5.3)

- mysql (2.8.1)

- sequel (3.24.1)

- jdbc-mysql (5.1.13) (JRuby only)

I then generated 10 files that each contain 10k rows of CSV data. I inserted these into a MyISAM table 5 times, for a total of 500k rows. Now, the results…

The Results

I was amazed at how much faster Ruby 1.9.2 with the MySQL gem was compared to JRuby with the same gem. However, once you move to the JDBC MySQL gem with JRuby, it edges out Ruby 1.9.2 slightly.

I was amazed at how much faster Ruby 1.9.2 with the MySQL gem was compared to JRuby with the same gem. However, once you move to the JDBC MySQL gem with JRuby, it edges out Ruby 1.9.2 slightly.

The Analysis

What do these results really mean? Here are a few take aways:

- Ruby 1.9.2 can insert 2x as many rows per second than Ruby 1.8.7

- JRuby with the MySQL JDBC gem can insert nearly 3x as many rows per second than Ruby 1.8.7

- JRuby with the MySQL JDBC gem performs 20% faster than Ruby 1.9.2 with bulk inserts

- (bonus) Rubinius 1.2.x and 2.0.0pre both have known issues with poor file I/O performance at the moment

Need to insert large amounts of bulk inserts where performance is key? Consider moving to JRuby and using the JDBC MySQL gem, or at a minimum move to Ruby 1.9.2 if you have not done so already.